With just a few words, Google's AI edits real photos like professional Photoshop work

- Google has just updated its Gemini Flash 2.5 Image model, letting users edit real photos quickly and naturally with nothing more than a simple text prompt.

- The model is fast: it often returns an edited image in under 30 seconds, while OpenAI's ChatGPT 5 sometimes took more than three times as long on the same requests.

- Its standout strength is keeping the original scene intact and changing only what was requested, for example swapping a subject's outfit or adding lighting effects without disturbing the background.

- Real people's faces stay recognizable, although they sometimes look slightly synthetic (airbrushed, or faintly mottled like the surface of a football).

- Edited images carry an embedded SynthID digital watermark, but the tool for detecting it is not yet available to the public.

- Gemini raised no warnings or restrictions when asked to add real celebrities such as Kim Kardashian or Donald Trump to genuine photos, something other tools typically push back on.

- The visible AI indicator can easily be cropped out of the images, creating a risk that they will be used to make misleading fakes or to manipulate public sentiment.

- Some errors remain: it can make a face look too young, or produce an entirely different face when asked for further adjustments.

- AI researchers worry that detection of fake images has not kept pace with increasingly capable AI image generation, especially when the images look real and can spread quickly.

📌 Google Gemini's AI photo editing is advancing very fast, making edits to real photos extremely easy and convincing. Although it can add celebrities, change the lighting, or swap outfits in seconds, the risk of creating misleading fake images is very high. The watermark detection tool has not yet been released publicly, which increases the risk of abuse.

https://www.washingtonpost.com/technology/2025/09/01/gemini-flash-nano-banana-ai-photo-editing/

Masterful photo edits now just take a few words. Are we ready for this?

For better or worse, Google’s Gemini chatbot just got an image manipulating upgrade.

Today at 6:00 a.m. EDT

Using artificial intelligence to create images out of whole cloth is nothing new. Using AI to strategically or even surgically manipulate genuine photos has always been trickier — until Google DeepMind leapfrogged the pack with a new tool.

Just ask, and its new Gemini Flash 2.5 Image model, available to play with inside Google’s Gemini chatbot, can plop pets into new locales, convincingly colorize monochrome photos and even mark up points of interest in a cityscape.

We all have our share of photos that didn’t turn out quite right. Now editing them artfully no longer requires expertise — just a Google account and the willingness to play supervisor to an AI photo assistant. But how well do Gemini’s new image manipulation skills actually work? We put them to the test.

What it can do

Google’s new AI model — formerly known as “Nano Banana” — is especially interesting for a few reasons.

First, it’s fast. The updated Gemini often churns out edited images in under 30 seconds, while OpenAI’s ChatGPT 5 sometimes took more than three times as long to handle the same requests. (The Washington Post has a content partnership with OpenAI.)

It’s also really good at maintaining a consistent context — ask it to make changes to one part of an image, and it will keep the rest mostly untouched.

Consider this photo my wife took of me in a phone booth in Japan.

(Shara Tibken)

After receiving my simple prompt (“replace this man’s outfit with a bright orange tuxedo and a big showgirl’s headdress”), Gemini spit out this image where I am the only thing that has noticeably changed.

(Chris Velazco/The Washington Post via Google Gemini)

Look carefully enough and you’ll notice that the numbers on the phone’s keypad — along with some of the Japanese text littered throughout the scene — have been transmuted into AI gibberish. But all of the important scene-setting elements remain, even if you ask the AI to tweak the lighting and replace me with, say, a water buffalo:

(Chris Velazco/The Washington Post via Google Gemini)

Gemini is also notable for the way it treats the people in the images it edits: They're (mostly) recognizable as themselves in the results, even if you try to fine-tune those results with even more requests.

Here’s a photo I took of The Washington Post’s future of work reporter, Danielle Abril, before and after I asked Gemini to “surround the subject with neon lights and change the lighting of her face accordingly.”

(Chris Velazco/The Washington Post via Google Gemini)

Even bathed in magenta light, Danielle still looks like Danielle. That’s even true when you ask Gemini to try something a little more involved, like turning her face to look directly at the camera.

(Chris Velazco/The Washington Post via Google Gemini)

Tools like ChatGPT, though, couldn’t quite match Gemini’s performance. Given the same prompts, GPT-5 produced results that either skewed too far into unreality or couldn’t keep Danielle’s face looking the same.

That’s not to say Gemini deals with our likenesses perfectly, though. People’s faces tend to take on a slightly synthetic cast, as though their likenesses have been airbrushed. Zoom in close enough on the images Gemini spits out, and you may also spot a sort of slight mottling — to me, at least, it’s reminiscent of the minute bumps on a football.

And sometimes, the tool just doesn’t know how to deal with a request.

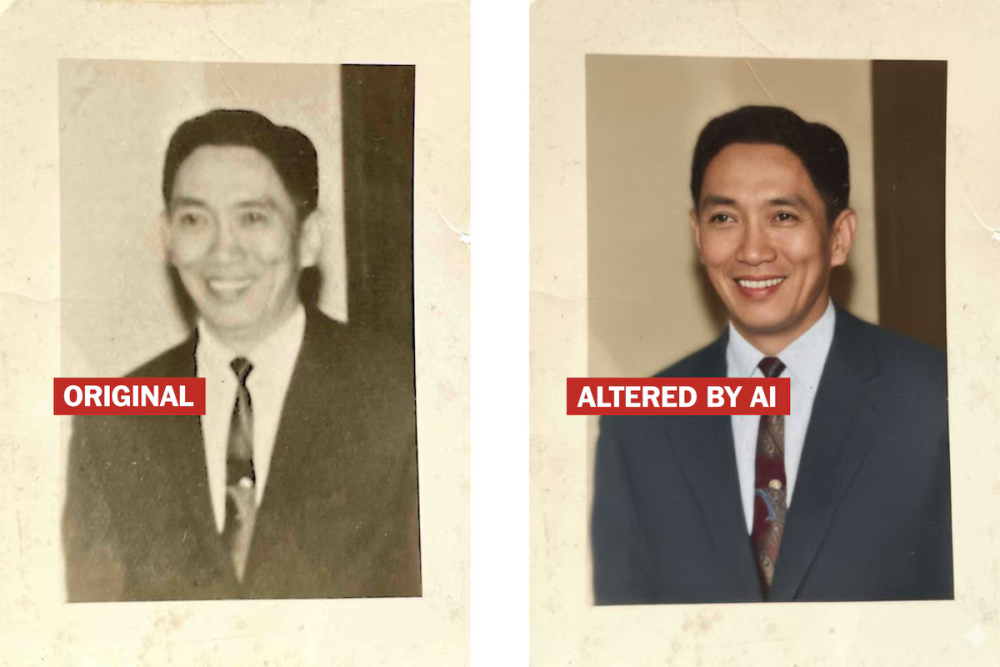

In the example below, I asked Gemini to colorize and sharpen this old, blurry photo of my grandfather — one of just a few my mom still has left after all these years.

Gemini’s first draft looked great in color, but according to my mom, it left my grandfather looking a little too young (middle photo). When I asked Gemini to make his face a little older and a touch more wrinkly, it spit out someone who, to me, looks completely different (right image).

(Chris Velazco/The Washington Post via Google Gemini)

Faux photo fallout

Gemini’s new model clearly isn’t perfect, but it’s fast, effective and accessible enough that people are cooking up ambitious ways to use it. Even so, facets of Google DeepMind’s approach to manipulating images have come as a surprise — and not necessarily a good one — to some AI researchers.

When Vincent Conitzer took Gemini’s new image skills for a spin, the first thing he asked it was to add Kim Kardashian to a photo of Travis Kelce and Taylor Swift at a football game.

“My first reaction was, ‘Wow, that was fast and easy,’” the Carnegie Mellon University professor of computer science told The Post. But then the surprise kicked in: Unlike other AI tools, Gemini offered “no pushback whatsoever” when asked to add the likeness of a real person to an otherwise genuine image, Conitzer said.

That’s not just true for Kim K., either — Gemini didn’t complain when I had it add Vin Diesel to photos of a friend throwing a Fast & Furious-themed birthday party, and it realistically added Donald Trump to a photo of my very Republican mom without a fuss.

Google and DeepMind did not immediately respond to a request for comment.

It’s not hard to see how this kind of speedy, sophisticated editing could make it easier to generate polarizing images that inflame public opinion across the internet. They might be more likely to pass a quick gut check, too, because Google’s model is happy to leave certain elements of the image basically untouched.

It doesn’t help that detecting instances of AI manipulation in images is still trickier than it ought to be. All of the dozens of photos Gemini created for me were tagged with a tiny indicator in the bottom-right corner to signify AI’s helping hand, but those can easily be cropped out after the fact.

Google also says the images edited with its new Gemini model have special “SynthID” watermark data embedded in them, which can be used to highlight specific AI manipulations.

The catch? The tool for detecting that telltale data, which Google announced in May, is not yet available to the general public.

Conitzer says there are some social guardrails in place here: If an AI-edited image goes viral or depicts something potentially newsworthy, it’s more likely to wind up scrutinized or debunked. What might be more concerning are the AI manipulations that fly under the radar.

“What’s to prevent me from sharing this image with somebody that I know cares about these things a little bit,” he said. “They’re probably not going to check whether it’s real or not.”

If one thing is clear to people like Conitzer, though, it’s that none of this is going away. Despite their potential for social fallout, AI tools are only going to get more capable. What’s less clear is how the rest of us will fare in trying to keep up with them.

“There’s a lot of capital in that space to try to build the next big thing, and I feel like as a society, we’re just kind of reacting to wherever it takes us,” he said. “It doesn’t seem like we’re very much in control of the process.”

As someone who works with photos a lot, it’s been fascinating spending these last few days touching up, or flat-out transforming, my images in such high quality, just by asking nicely. At their best, which you’ll never know when to expect, the results can be ridiculously impressive.

But it’s also true that the ability to convincingly, maybe even maliciously, manipulate otherwise authentic images has just fallen into our laps, and I can’t help but worry about what comes next.
